Privacy and Security: The Real Story About Using AI at Work

Table of Contents

- Stop Letting Privacy Paranoia Kill Your Productivity

- The "I Can't Use This for My Work" Blocker

- The Critical Distinction: Data Processing vs. Model Training

- How to Use AI Safely at Work (It's Easier Than You Think)

- What You Can Safely Process (Even with Sensitive Data)

- A Practical Privacy-by-Design Approach

- The DeepSeek Warning (And Why It Matters)

- Questions to Discuss with Your Privacy Team

- The EU AI Act Reality Check

- The Bottom Line

- Contact 1GDPA

Stop Letting Privacy Paranoia Kill Your Productivity

This article is co-authored with our friend Kris at 1GDPA, who has extensive experience with privacy and AI.

DISCLAIMER: This article is for informational purposes only and does not constitute legal advice; please consult a qualified attorney who is certified in data protection for legal guidance regarding your company's specific circumstances.

The "I Can't Use This for My Work" Blocker

Stop right here if you're thinking: "This sounds great, but I can't put personal data, confidential, or proprietary company information into AI tools."

You're not wrong to be cautious. But you're probably wrong about what's actually risky.

Most people operate under outdated assumptions about AI privacy that are costing them massive productivity gains. Let’s try to clear things up.

The Critical Distinction: Data Processing vs. Model Training

What most people think: "If I put my data into ChatGPT, OpenAI will use it to train their models and my competitors will see it."

The reality: There's a huge difference between AI processing your data and AI training on your data.

Processing: AI reads your document and helps you improve it, but the model does not learn from it. Think of it like hiring a consultant who signs an NDA and shreds their notes afterward. (Providers may still store conversations for a limited period, so check the retention settings too.)

Training: AI permanently learns from your data and could potentially reproduce it in responses to other users. This is what you actually want to avoid.

Here's the kicker: You can easily prevent training while still getting all the processing benefits.

How to Use AI Safely at Work (It's Easier Than You Think)

Every major Western AI provider gives you simple ways to turn off training entirely:

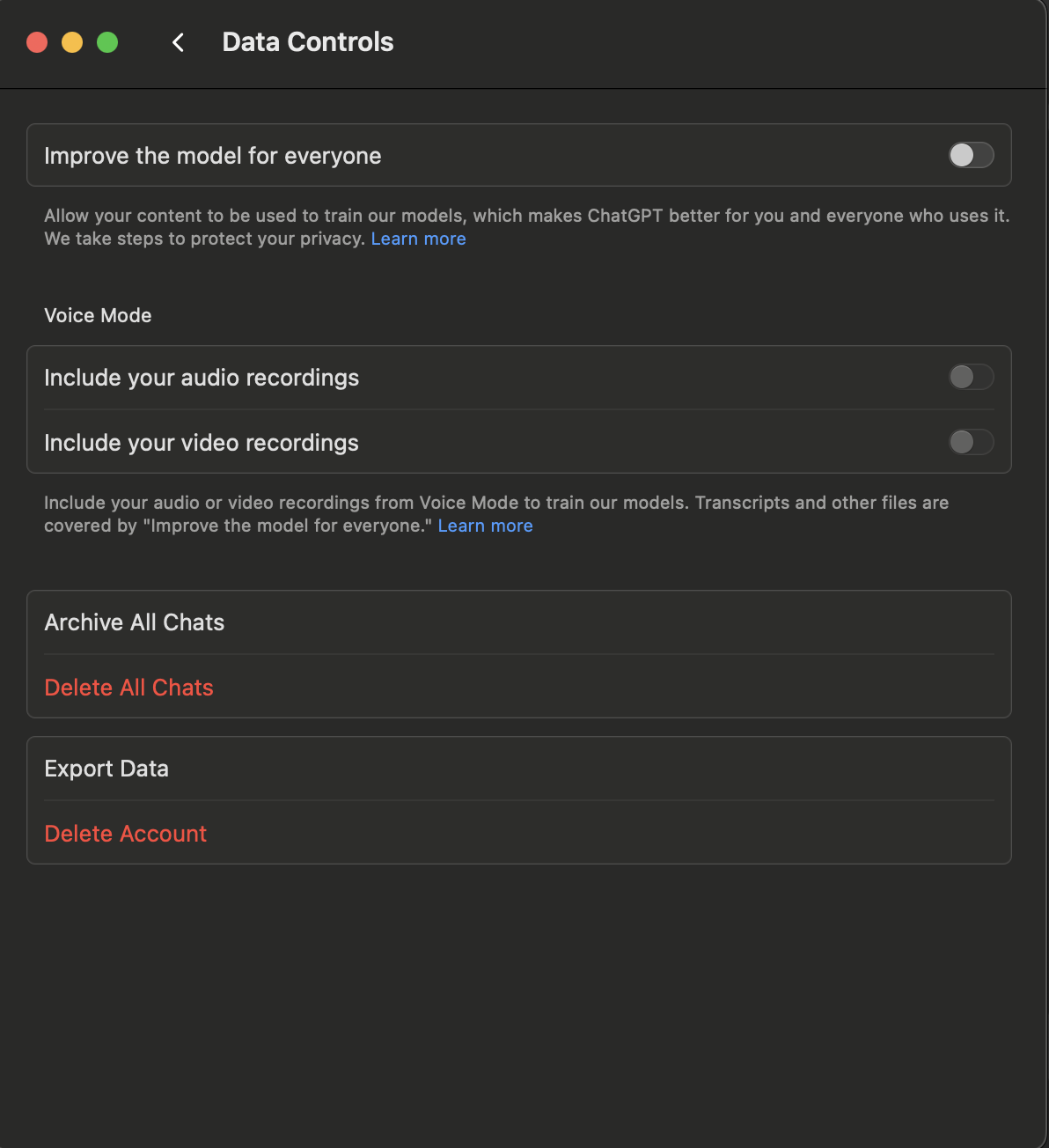

ChatGPT (Data controls FAQ):

Paid accounts can opt out of training in settings

Free accounts can disable chat history (which also disables training)

Enterprise accounts never use your data for training

Ensure the toggles are OFF!

Claude (Is my data used for Model Training?):

Paid accounts don't use conversations for training

Enterprise accounts have additional privacy controls

Anthropic built strong privacy commitments into their design

Google/Gemini (Gemini Apps Privacy Hub):

Workspace accounts disable training by default

Consumer accounts let you disable data usage in settings

The simple rule: If you pay for the service, you can almost certainly opt out of training. If it's free, check the privacy settings.

What You Can Safely Process (Even with Sensitive Data)

Once you disable training, you can use AI to process:

Strategic documents: Competitive analysis, internal memos, strategic plans

Customer data: Feedback synthesis, support ticket analysis (remove personally identifiable information first)

HR content: Job descriptions, interview questions, performance review frameworks

Technical specifications: Code reviews, architecture documentation, API designs

The key: Remove or anonymize truly sensitive details like specific revenue numbers, customer names, and proprietary algorithms. But you can absolutely process the structure and content.
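As a rough illustration of the "remove or anonymize" step, here is a minimal Python sketch that scrubs a few obvious detail types before text goes into an AI tool. The function name `scrub` and the three patterns are assumptions made for this example. They catch only simple cases (emails, currency amounts, phone-like numbers), so treat this as a first pass, not a complete PII filter.

```python
import re

# Illustrative patterns only; real PII detection needs much broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
MONEY = re.compile(
    r"[$€£]\s?\d[\d,]*(?:\.\d+)?(?:\s?(?:million|billion|bn|k|m)\b)?",
    re.IGNORECASE,
)
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def scrub(text: str) -> str:
    """Replace emails, money amounts, and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    # Amounts are replaced before phone numbers so long figures are not
    # mistaken for phone numbers.
    text = MONEY.sub("[AMOUNT]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    note = "Contact jane.doe@example.com or +1 (555) 010-9999 about the $1.2M renewal."
    print(scrub(note))
```

Customer names and proprietary terms won't match generic patterns like these, so they still need a manual pass or a name list you maintain yourself.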

Side note: Follow your organization's AI and data protection policies, or work to change them. Many privacy specialists don't understand how AI tools actually work. Encourage everyone to become AI literate through daily AI exposure and immersion; Article 4 of the EU AI Act requires providers and deployers of AI systems to ensure AI literacy among their staff.

A Practical Privacy-by-Design Approach

Level 1 (Safest): Use AI for structure and format only; add sensitive details manually afterward.

Example: "Create a competitive analysis framework for enterprise software companies" → you fill in the specific details later

Level 2 (Most Common): Anonymize sensitive details, but process real content.

Example: "Analyze this customer feedback and suggest improvements" but replace "Acme Corp" with "Client A"

Level 3 (Full Processing): Use AI with complete context when training is disabled, data is properly classified, and your company policies permit it.

Example: Full document analysis for internal strategy documents
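The Level 2 swap ("Acme Corp" becomes "Client A") is easy to do by hand for one document, but a small script keeps it consistent and reversible. Below is a minimal sketch, assuming you already have a list of the names to hide. The helper names `pseudonymize` and `restore` are hypothetical, and the mapping stays on your machine so you can put the real names back into the AI's answer afterward.

```python
import re
from string import ascii_uppercase


def pseudonymize(text: str, names: list[str]) -> tuple[str, dict[str, str]]:
    """Replace each known name with 'Client A', 'Client B', ... and return the mapping."""
    mapping: dict[str, str] = {}
    for index, name in enumerate(names):
        alias = f"Client {ascii_uppercase[index]}"  # supports up to 26 names
        mapping[alias] = name
        text = re.sub(re.escape(name), alias, text, flags=re.IGNORECASE)
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Put the real names back into the AI's response, locally."""
    for alias, name in mapping.items():
        pattern = rf"\b{re.escape(alias)}\b"
        text = re.sub(pattern, lambda _match, real=name: real, text)
    return text


if __name__ == "__main__":
    feedback = "Acme Corp reports slow onboarding; Globex wants SSO."
    masked, mapping = pseudonymize(feedback, ["Acme Corp", "Globex"])
    print(masked)
    print(restore("Prioritize SSO for Client B.", mapping))
```

If one name contains another (say "Acme" and "Acme Corp"), list the longer name first so it is replaced before the shorter one.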

The DeepSeek Warning (And Why It Matters)

Avoid AI tools from Chinese providers for any personal data or business content. Tools like DeepSeek operate under different privacy frameworks and data sovereignty rules.

Stick with Western providers (OpenAI, Anthropic, Google) that have clear, enforceable privacy policies and legal frameworks you can rely on in independent courts.

Questions to Discuss with Your Privacy Team

1. Do You Make Automated Decisions About People? (Article 22 GDPR)

The actual test has two parts. First, does your business make automated decisions about people or build individual profiles of them? Second, do those decisions or profiles have a legal effect on someone or significantly affect their rights? The rules apply only if the answer to both is yes.

Here's the kicker: Whether you use AI is irrelevant. If you do this type of processing, the rules apply whether you use AI, a spreadsheet, or a magic 8-ball.

Reality check: Most businesses don't do this. The European Commission gives a clear example: an online loan application where there's no human in the loop. The European Data Protection Board clarifies that "solely automated decision-making is the ability to make decisions by technological means without human involvement."

Who actually does this: Organizations that specialize in lending, hiring, insurance, healthcare, and law enforcement.

The human-in-the-loop escape hatch: When humans stay in the loop and apply their judgment to decisions that have legal impact or affect human rights, it's not an automated decision. It doesn't matter if humans use a computer for assistance—even if that computer helps harvest or analyze data—as long as humans apply their own judgment.

The bottom line: For Article 22 purposes, AI tools work like any other software. What matters is whether a human applies their own judgment, not which tool helped them get there.

If you do make fully automated decisions: Talk to your privacy team about safeguards like human intervention options, ways for people to express their viewpoints, and the ability for affected people to contest decisions.
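To make the logic of this question concrete, here is a rough Python sketch of the test as described in this section. The class and field names are assumptions chosen for illustration, and the output is a prompt to talk to your privacy team, not a legal determination.

```python
from dataclasses import dataclass


@dataclass
class ProcessingActivity:
    automated_decision_or_profiling: bool  # decisions or profiles made by technological means
    human_applies_judgment: bool           # a person applies their own judgment to the outcome
    legal_or_significant_effect: bool      # legal effect or significant impact on someone's rights


def needs_article_22_review(activity: ProcessingActivity) -> bool:
    """Return True when the activity looks like solely automated decision-making."""
    solely_automated = (
        activity.automated_decision_or_profiling
        and not activity.human_applies_judgment
    )
    return solely_automated and activity.legal_or_significant_effect


if __name__ == "__main__":
    # Online loan application with no human in the loop.
    loan = ProcessingActivity(True, False, True)
    # AI summarizes applicant files, but a loan officer makes the decision.
    assisted = ProcessingActivity(True, True, True)
    print(needs_article_22_review(loan), needs_article_22_review(assisted))
```

Note that nothing in the check asks whether AI is involved, which matches the point above: the rules follow the type of decision, not the tool.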

2. Are You Transparent About Using AI? (Articles 13-15 GDPR)

The requirement: People whose data you use with AI systems must get clear notice of this processing.

What transparency actually means: Pull together a team from product, legal, risk and compliance, IT, HR, and marketing to confirm you provide appropriate notice in:

Public-facing privacy policies

Internal policies

Hiring processes

Any other business functions that use AI with personal data

The explainability requirement: Your notices must cover three things:

That you use automated decision-making or profiling with AI

Meaningful information about the logic involved (in simple terms)

The significance and consequences of such processing

Translation: Tell people you're using AI, explain how it works without getting too technical, and be clear about what it means for them.

3. What's Your Legal Basis for Processing Personal Data? (Article 6 GDPR)

Option 1 - Consent (The Gold Standard): Get explicit consent through clear unticked checkboxes and explanations. Consent is king and always will be.

Option 2 - Legitimate Interest (The Business Workhorse): You can process personal data with AI to satisfy your organization's legitimate interest under GDPR Article 6(1)(f). This works for fraud prevention, cybersecurity improvements, performance optimization, and product improvement.

The three-part legitimate interest test: When relying on legitimate interest, you need to pass all three parts:

Part 1 - Is your purpose legitimate? Pretty straightforward—fraud prevention, security, and product improvement generally qualify.

Part 2 - Is processing necessary and proportionate? You need to show that:

Data processing is necessary to achieve your purpose

No less intrusive means exist (like anonymization or pseudonymization)

Your approach is proportionate to your goal

Part 3 - Do individual rights override your business interest? Consider:

Risks to privacy, autonomy, and discrimination

Whether people would reasonably expect their data to be used this way

If the impact might be significant, can you mitigate risk through explicit consent or stronger safeguards?

Best practice playbook: Always be transparent—provide privacy notices, allow opt-outs where feasible, and consider doing a data protection impact assessment (DPIA) or fundamental rights impact assessment for high-risk AI systems.
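One way to keep the three-part test honest is to record each answer explicitly instead of deciding in your head. The sketch below is a hypothetical checklist structure, with field names invented for this example, that reports which part fails. It only organizes the answers; someone still has to reach them, ideally with your privacy team.

```python
from dataclasses import dataclass


@dataclass
class LegitimateInterestAssessment:
    purpose_is_legitimate: bool        # Part 1
    processing_is_necessary: bool      # Part 2
    no_less_intrusive_means: bool      # Part 2 (e.g. anonymization would not achieve the goal)
    approach_is_proportionate: bool    # Part 2
    individual_rights_override: bool   # Part 3 (True means the balance tips against you)

    def failed_parts(self) -> list[str]:
        """List the parts of the test that are not satisfied."""
        failures = []
        if not self.purpose_is_legitimate:
            failures.append("Part 1: purpose")
        if not (
            self.processing_is_necessary
            and self.no_less_intrusive_means
            and self.approach_is_proportionate
        ):
            failures.append("Part 2: necessity and proportionality")
        if self.individual_rights_override:
            failures.append("Part 3: balancing")
        return failures

    def passes(self) -> bool:
        """All three parts must pass."""
        return not self.failed_parts()


if __name__ == "__main__":
    fraud_check = LegitimateInterestAssessment(True, True, True, True, False)
    print(fraud_check.passes(), fraud_check.failed_parts())
```

A failed Part 3 is the cue from the list above: consider explicit consent or stronger safeguards before going further.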

The EU AI Act Reality Check

Be skeptical of complaints about European over-regulation. The EU AI Act targets genuinely problematic AI uses—think social credit scoring systems or unregulated police facial recognition—not your everyday business tools.

For general-purpose AI like ChatGPT, Claude, or Gemini? The Act barely restricts you at all. It's designed to discourage dystopian surveillance and set reasonable guardrails for high-risk AI applications that impact human rights.

The EU actually encourages small and medium businesses to use these technologies with proper safeguards. Small businesses should be able to write better code, improve customer interactions, and automate mundane manual processes to improve business productivity. The EU AI Act permits and encourages this type of activity.

The Bottom Line

GDPR and the EU AI Act don't prevent you from using AI tools—they just require you to protect people's personal data responsibly.

Here's what this means in practice:

Use paid AI services and disable training

Remove or anonymize sensitive details before processing

Be transparent about your AI use in privacy policies

Keep humans in the loop for important decisions

Stick with Western AI providers for business content

Most "privacy concerns" about AI are really "I don't understand how this works" concerns. Now you do.

Stop letting privacy paranoia cost you the productivity gains that could transform how you work. With basic precautions, you can use AI safely and legally for almost everything that matters to your business.

Contact 1GDPA

If your company is struggling to navigate this rapidly evolving AI landscape, 1GDPA can help. They offer tailored services in AI governance, data protection, and strategic planning for small and mid-sized enterprises.

Have you been avoiding AI tools due to privacy concerns? What's holding you back from implementing these safeguards at your organization? I'd love to hear about your specific challenges and wins in the comments.
